The integration of artificial intelligence (AI) tools into the academic writing process has sparked numerous debates, particularly around the ethical questions of plagiarism and AI detection. Interest in this topic was evident in the engagement and enthusiasm at our recent webinar on the ethical use of AI tools for academic writing. Although we couldn’t address all your queries in the limited time we had, we’re committed to helping you understand the relationship between AI technology and research integrity.

Paperpal product leads Nolita Coelho and Charlotte Baptista, who collectively have over 20 years of experience in academia, took a stab at answering questions on ethical writing in the era of generative AI tools. We plan to address additional questions later in this series. While this is an evolving topic, we hope this Q&A gives scholars a better understanding of how to benefit from AI writing assistants like Paperpal while complying with ethical writing practices.

Table of Contents

- University/Journal Guidelines

- AI-Generated Content, AI Detection, and Plagiarism Checks

- Paperpal’s AI and Responsible Writing

University/Journal Guidelines

- Where can we check whether a journal allows the use of generative AI (GAI)?

Charlotte: The author guidelines section of most journal websites should indicate the journal’s stance on the use of AI tools for research. If this seems to be missing, you can always write to the journal to confirm. While there may be variations across journals, the consensus is that AI or AI-assisted tools cannot be credited as an author or co-author. Authorship is a status and responsibility attributable to humans alone.

- What percentage of AI content do peer-reviewed scholarly journals legitimately allow?

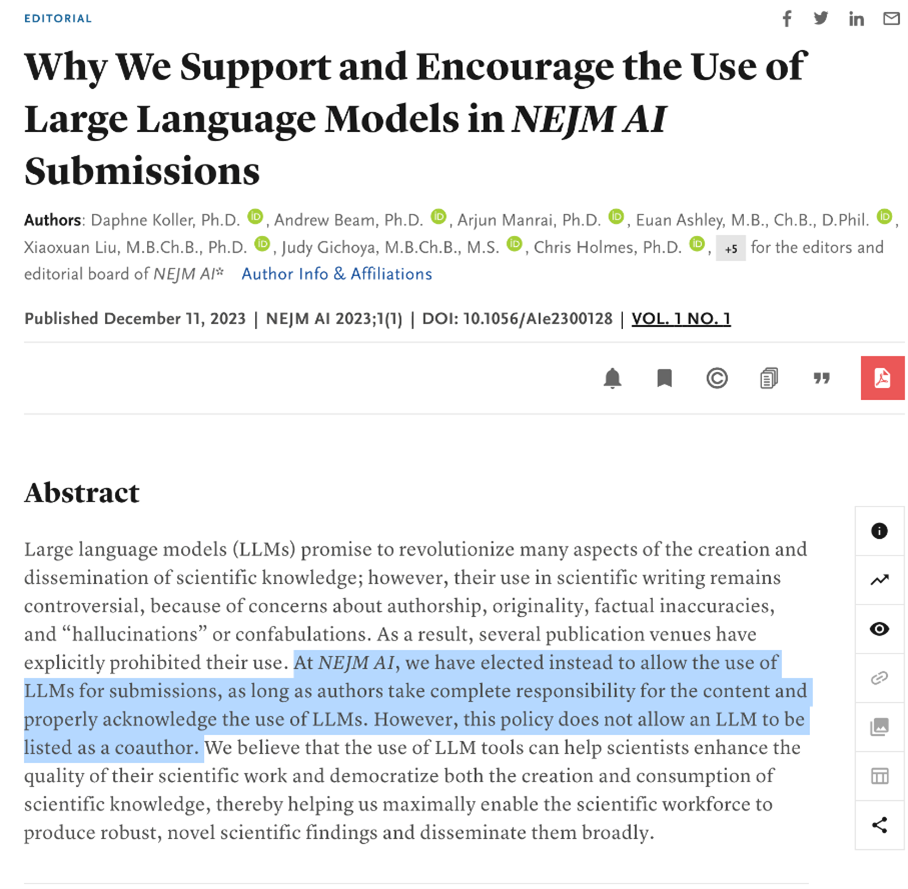

Charlotte: Note that attitudes to the use of AI are still in flux, regulations are still developing, and AI tools themselves are evolving rapidly. The New England Journal of Medicine recently announced its support for the use of large language models in article submissions, and others are likely to follow, recognizing the benefits for authors. Listing AI as a co-author, however, is a definite no.

Nolita: AI detection technology is at a very nascent stage and is known to generate many false positives. Given this, AI detection is mainly used to screen whether a piece of text has had AI involvement; if it has, the text typically requires deeper scrutiny. The objective is not to pass or fail a manuscript based on a percentage threshold of AI use. Put simply, if you retain AI-generated text as-is while trying to stay under some percentage threshold, you’re using generative AI tools incorrectly.

- Will journals allow the use of AI for tasks such as plagiarism or grammar checks?

Charlotte: Most journals outline their editorial policies on plagiarism; see Nature’s detailed overview. Submissions are screened using software such as Crossref Similarity Check (powered by iThenticate) to flag manuscripts with high similarity to published works, and such manuscripts are often promptly desk rejected. When the similarity is accidental, this can cause considerable frustration for authors and delay publication. Authors share the responsibility for avoiding misconduct with journals, and many institutions, such as the University of Cambridge, outline students’ responsibilities for understanding how to reference properly. As a preventive measure, authors can run their manuscripts through plagiarism detection software before submission, provided the aim is to catch unintentional plagiarism rather than to mask misconduct. Similarly, grammar checkers can be a great way to catch mistakes and reduce the chances of rejection due to language quality, particularly for authors whose first language is not English.

- Can I submit an AI-generated abstract?

- Is generating my abstract or email and just copying it, without changing anything, not unethical?

Nolita: It’s not advisable to submit an AI-generated abstract or any AI-generated content directly. The AI-generated abstract should be based on your original manuscript content. An abstract generator can help you quickly arrive at a short description of your study aims and findings; you can use this to build a stronger abstract. You can consider incorporating the AI content into your final manuscript submission but only after careful review to make sure it reads well and captures the essence of your article.

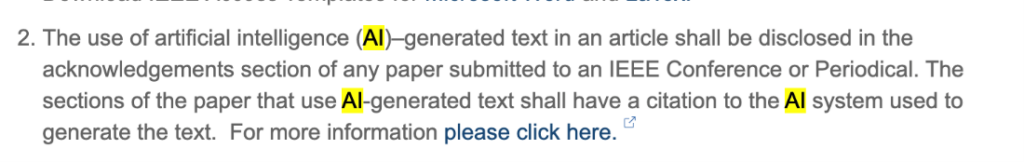

Charlotte: Do not use output generated by AI tools directly. First off, carefully review your institution’s or journal’s policies on the use of AI tools; if such tools are allowed, look for guidelines on declaring their use. The IEEE submission guidelines, for example, state that the use of AI tools must be mentioned in the acknowledgements section.

Secondly, using AI-generated ideas or text can speed up the writing process, but avoid using it as-is, which is no different from plagiarism. JAMA, for example, does not permit the use of reproduced material from AI tools. Also be aware that hallucination, the generation of made-up facts and details, is a major challenge with generative AI. Always check the content thoroughly and incorporate only the elements that fit well into your paper, in your own voice.

- If authors use their own ideas and text but improve them slightly, e.g., with grammar corrections or paraphrasing using a tool, should this be acknowledged? It isn’t practical to acknowledge Grammarly, Wordtune, ChatGPT, QuillBot, MS Word, etc.

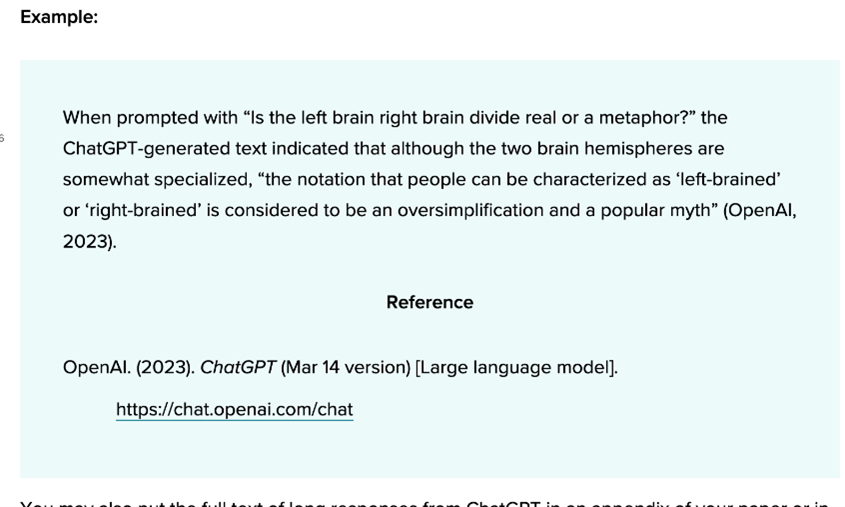

Charlotte: Generative AI can be used for many tasks. Always disclose when you have used such tools, even to a small extent. I would suggest referring to your journal’s or university’s guidelines on how to cite your use of these AI tools, provided, of course, that their use is permitted. MIT, for example, clearly states which elements (tool name/version, prompt, response, etc.) to save when using ChatGPT and other AI tools. Many universities and style guides now specify a format for citing the AI tools used; the APA, for instance, has detailed guidelines on how to cite generative AI models.

AI-Generated Content, AI Detection, and Plagiarism Checks

- When you run AI-assisted text through Originality.ai, it tags everything as AI-generated (even though the original idea and writing were yours). How do you address this?

- How can we avoid content being detected as AI by Turnitin?

Charlotte: Firstly, don’t lose heart! Most AI detectors have a high rate of false positives; TechCrunch did a great roundup on this. I understand that it can be frustrating if your original work is incorrectly flagged as AI-generated. If this happens and it’s a false positive, you can take the following measures:

1. Clarify matters with your supervisor, institution, or journal editor.

2. Document your writing process and present it as evidence.

3. Run your document through a plagiarism check to ensure there are no cases of high similarity.

4. Refine your writing and add citations where needed.

5. Share this feedback with the Turnitin/Originality.ai teams. AI detectors still have some way to go before they are completely reliable!

Note that both Turnitin and Originality.ai have addressed the matter of false positives in their own blogs (1, 2), with great resources for students on how to handle these scenarios.

Nolita: AI detection tools are estimated to have error rates of around 9%. There are also known cases where AI detectors get it very wrong:

- The US Constitution was flagged as written by AI

- A team from Stanford showed that writing by non-native English speakers is more likely to be flagged as written by AI.

Given these limitations, some universities, such as Vanderbilt, are advising faculty against using AI detection tools. OpenAI withdrew its AI detection tool, citing “low rates of accuracy.” Turnitin itself acknowledges the possibility of false positives in AI detection and advises faculty on how to handle them. You may therefore find your text flagged by AI detectors. Don’t fear this situation if it arises. Engage in a collaborative discussion with your faculty or the journal editor, and be transparent about how you may have used AI in preparing your document.

- Is there any AI that can help reduce plagiarism percentage and ensure better English language in a research paper?

Charlotte: Expecting AI to reduce your similarity percentage is not an ethical goal. AI-generated output may contain phrasing similar to existing sources in its training data, so it’s not unusual for it to be flagged in the similarity report. Here are some things you can do if you find that your AI-assisted document has a high similarity score:

1. If you have incorporated AI-generated text as-is, please review it thoroughly and make it your own. Failure to review AI outputs might result in incorrect ideas or meaning errors being retained in the generated abstract or any other section.

2. Once you’ve made the necessary corrections and deleted non-relevant portions, reorganize the ideas so they flow well.

3. Finally, remember to check that you’ve met word count requirements and included citations from your library if needed.

You may end up discarding 80% of the AI-generated text, but you will end up with authentic, original text—and you’ll still have worked faster than you would have without the AI content to serve as a guideline.

- I heard that tutors and lecturers have recently been using Turnitin (teacher version) to check for plagiarism and AI-generated text. Since the examples you mentioned in the demo are mostly AI-generated, will such text be detected by Turnitin?

- Is AI-generated text plagiarized? Can AI detect paraphrased sentences?

- Can any plagiarism checker tool detect Paperpal software?

- Even if we use our own unique words in the prompt of AI, how would we know that it does not match with any other text? Or if the source is accurate?

- If, after a literature review, we write something ourselves and then annotate it using Paperpal, will this content be flagged as plagiarism in Turnitin?

Charlotte: Generative AI models may draw on ideas or phrasing from their training data, and these snippets might be highly similar to other published works. So it’s not unusual for outputs generated by Paperpal or other generative AI tools to be flagged by plagiarism checkers. Further, we’ve found that even original content that hasn’t been processed by AI tools is naturally flagged by similarity checkers when it includes text from published sources or uses common phrasing in scientific writing.

Nolita: There’s a fundamental difference between “plagiarism checkers” and “AI detectors,” as explained in this article. Plagiarism checkers are more objective: they look for exact matches with published literature, internet sources, and internal university repositories. Since tools like Paperpal follow standard academic sentence construction, there is a chance that a plagiarism checker might flag individual sentences as resembling published literature.

AI detectors on the other hand look for patterns and variables more common to text written by AI, which can occur even if the content is completely original or can’t be traced to its source. This is one of the reasons why AI detectors are known to incorrectly flag writing from non-native English speakers as written by AI.
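To make the idea of exact-match checking concrete, here is a minimal Python sketch. It assumes a simple word-trigram overlap as a stand-in for what similarity checkers do; the functions `ngrams` and `overlap_score` and both sample sentences are hypothetical, and commercial tools such as iThenticate or Turnitin use their own, far more elaborate methods.

```python
# Hypothetical sketch: word n-gram overlap as a simplified stand-in for
# exact-match similarity checking. Not how any specific commercial tool works.
import re


def ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams (punctuation ignored)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_score(submission: str, source: str, n: int = 3) -> float:
    """Fraction of the submission's n-grams that also occur in the source."""
    sub = ngrams(submission, n)
    if not sub:
        return 0.0
    return len(sub & ngrams(source, n)) / len(sub)


# Two independently written sentences that share a stock academic phrase.
published = ("The results of this study suggest that further research "
             "is needed to confirm these findings.")
original = ("Our results suggest that further research is needed before "
            "the method is adopted clinically.")

print(f"{overlap_score(original, published):.2f}")  # → 0.33
```

In this toy example the second sentence was not copied, yet a third of its trigrams match the first, purely because of the common phrase “suggest that further research is needed.” That is the kind of match a similarity checker reports, whether or not AI was involved.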

- If we cite a statement from a previous study as such in quotes then it isn’t plagiarism?

Charlotte: If you’re citing another study and quoting it verbatim, a gold-standard tool like Turnitin should ideally detect the quotation marks and not flag the quote in the similarity check. Direct quotes are common in the humanities, especially in literature, where quoting other works is standard practice, and in fields such as psychology, where interview participants are often quoted in the study. They are rarely used in the sciences, however. If the works you quote fit meaningfully into your discourse, quoting can be a great way to showcase the contribution of your work vis-à-vis the published literature.

Paperpal’s AI and Responsible Writing

- How does Paperpal specifically focus on the ethics? What does ethics mean in this context? How can I be careful with ethical issues while using this tool?

- I have a question related to whether some of the things we can do with Paperpal are indeed ethical, for example, generating outlines for the literature review. Is this endorsed as ethical by anybody other than Paperpal?

- Please give an explanation why something is ethical, just saying something is ethical does not make it ethical. Based on what is it ethical?

Charlotte & Nolita: We put a lot of thought into designing Paperpal and Copilot, our generative AI suite. We were extremely cognizant of attitudes toward the use of generative AI tools in research and of the risks of AI hallucination and plagiarism. While we wanted to introduce generative AI into the writing process, we needed to do it in a way that would make our users more productive while ensuring the AI would not take over the writing. Here’s how this translated into Paperpal’s Responsible AI guiding principles:

1. Proprietary generative AI models: Paperpal Copilot’s AI does not use ChatGPT. It is based on custom models trained primarily on scholarly content and fine-tuned for academic writing. We also designed the experience around tried-and-tested prompts so that authors could get quicker, more reliable results with less trial and error.

2. Giving the author full control: Copilot never generates full text, but instead offers outlines or advice to help authors build a draft. Paperpal Copilot provides language or paraphrasing suggestions to speed up the polishing process, but text is processed only a few paragraphs at a time, forcing authors to review the result, add citations, and retain authenticity.

3. Robust data security practices: Because we use our own AI models, we can guarantee the privacy of your research or any generated data and ensure that it is never used for training or shared with third parties.

While using Paperpal or any other generative AI tool for writing, you should always:

- Use the tool to help with research tasks in accordance with your university or journal’s policies.

- Avoid using the output as-is; look out for meaning changes or inaccuracies, refine text in your own voice, and incorporate only the relevant bits into your paper.

- Add citations to external sources where needed.

- Dr. Asad said that we should verify the sources first, but can we do it the other way around, starting with AI and then checking the cited sources after idea generation?

Charlotte: It helps to understand what generative AI systems do well and what they don’t. Most generative AI systems are not very well-versed in scholarly literature; hallucinated or made-up references are a real problem that all scholars need to be aware of. Critical thinking tasks, such as idea generation, are also best left to you, the expert. It is essential to put in the necessary work of finding relevant literature, identifying potential research gaps, building a hypothesis, and validating your contribution to the field. What you can do is use AI to speed up time-consuming tasks, such as getting article recommendations and summaries, brainstorming, and getting a language review. Generative AI tools perform especially well at these types of tasks!

Nolita: Generative AI is a good source of ideas. However, when it comes to crafting an original academic narrative, it’s best that this comes from you, the author, after you have done the work: in-depth literature reviews, identifying research gaps, and so on. You can use AI to speed up this process by extracting and comparing key findings from various sources.

- Could you clarify this for me? Paperpal doesn’t provide any references for the text, so how can this be addressed?

- Why doesn’t Copilot add references in the Introduction and Discussion? Without references, the text loses its authenticity.

Charlotte: Paperpal was not designed to generate references for your writing. As the author, and expert, you are best placed to detect statements that need to be supported with a citation. It’s usually best to add relevant citations from your research library or literature review into the text. Use generative AI for what it does best: speeding up literature review by getting paper recommendations, summarizing articles, extracting information quickly, and understanding concepts. Keep all tasks that involve critical thinking, such as deciding what reference needs to be added where, to yourself, the expert.

- If you rephrase with AI, particularly Paperpal, does it show AI detection and plagiarism?

- Suppose I use the paraphrased text generated by Paperpal AI in my essay or paper. Won’t I be penalized for AI plagiarism or for using AI-generated text directly?

- I am still somewhat unsure why or how getting AI to paraphrase, trim, or rewrite my text in a more academic way can be considered ethical. Even though the original writing is my own, this “cleaned up” version is not really my work.

- Can you please show a demo of a generated text by Paperpal that can clear the AI plagiarism checker?

- How can I use Paperpal to avoid plagiarism? If we rewrite with it, does that count as plagiarism?

Charlotte: We hope that all our authors will use Paperpal’s AI-generated content as a starting point, a means to an end. Paperpal was not designed to evade plagiarism checkers; it was meant to give you something to work with when you’re stuck, and you always need to make it your own! In my own experience, starting with an outline helps me overcome the daunting blank page. I may end up reorganizing, discarding most of the AI suggestions, and retaining only some of the phrasing, but it’s still much easier and faster to get to a strong first draft with the help of AI than without it. The end result is still completely my own.

Nolita: I’ve encountered many different uses of Rewrite, and in all of them, I see more positive than negative uses of technology. Take someone whose first language is not English: they first jot down their ideas in whatever words come most readily, so as not to lose their train of thought. Then they use a combination of language checks and Rewrite to polish the language so it reads as native, as a journal editor would expect. Yes, many of the “words” may be borrowed, but the ideas and thoughts expressed are very much their own.

In some cases, I’ve seen users generate multiple rewrites to see whether something can be said more concisely or to compare how ideas are presented, and then write a sentence of their own. That said, I have also observed clear misuse of paraphrasing tools, where sentences flagged by plagiarism checkers are blindly run through AI and then incorporated without review or appropriate citations. When this happens at a large scale within a document, however, today’s plagiarism tools are fairly well equipped to identify such patterns and flag them as problematic.

We’re committed to fostering a community of conscientious academics and hope these responses help illuminate the path toward ethical and effective use of AI tools in your academic writing journey. If you have ideas or queries on this topic or about Paperpal, please write to us at hello@paperpal.com; we’d love to hear from you. Here’s wishing you all the very best; may your future endeavors be marked by both innovation and integrity.

Paperpal is an AI writing assistant that helps academics write better and faster with real-time suggestions for in-depth language and grammar correction. Trained on millions of research manuscripts enhanced by professional academic editors, Paperpal delivers human precision at machine speed.

Try it for free or upgrade to Paperpal Prime, which unlocks unlimited access to premium features like academic translation, paraphrasing, contextual synonyms, consistency checks and more. It’s like always having a professional academic editor by your side! Go beyond limitations and experience the future of academic writing. Get Paperpal Prime now at just US$19 a month!